Source Generators - real world example

In this post I will show you how to generate code using a new .NET feature called Source Generators. I will walk through a complete real-world example, including testing, logging and debugging, which I put together from several sources and figured out by experimenting.

Updates

- 15.11.2020 - Added information about the IntelliSense fix

- 10.07.2021 - Added a NuGet package and updated the IDE support section

Introduction

This is my first attempt at blogging. I put some effort into making it good, but like any early-stage product it may contain some errors. Also note that in this post I will refer to Source Generators as SG for brevity.

- Updates

- Introduction

- History

- Why

- Basic generator

- Analyze - Compilation

- Analyze - Additional Files

- Template

- Testing

- Msbuild

- Logging

- Debugging

- IDE support

- Nuget package

- Summary

- Useful links

Ok, let’s go to the meat!

History

Code generation has been available in .NET from the beginning, but it has always had some drawbacks. We had:

- CodeDom, which has been available since .NET 1.0. It offers a smaller set of functionality than Roslyn, and it works out of process because csc.exe is used behind the scenes. Additionally, it tries to abstract over all languages (C# / VB.NET), and because of that you can't use language-specific features. This API is now effectively superseded by Roslyn and Source Generators.

- T4 lets you generate code from a template written in T4 notation; the engine combines this template with some input data (a file, a database, etc.) to produce code. This technology has been commonly used and is definitely not dead, but Microsoft is slowly moving away from it in new products. Note also that T4 is only a template engine, so in theory it could be used inside a source generator, but I haven't seen any samples doing so. Source generators are a bit different from a plain template engine.

- Injecting IL - there are two main tools for this technique: the commercial PostSharp and the open-source project Fody. Both use a technique named code weaving to inject IL code during the build process. This technique has allowed us to do Aspect Oriented Programming (AOP) for many years, but it has one main drawback: the generated code is a kind of black box. Something is injected into the code, but the IDE and compiler are not aware of it, so when you try to debug, very weird things can happen. Because of this magic, many people don't want to introduce these techniques into their projects; the result is simply not plain code that anybody can navigate to.

- Roslyn-based tools before source generators - there are two that I know of: CodeGeneration.Roslyn and Uno.SourceGeneration. I have used the first one and was rather pleased, but it has some drawbacks. The main problem I see now, compared to SG, is how the code is generated: in those tools you have to build the code out of Roslyn objects. This requires good Roslyn knowledge, although tools like https://roslynquoter.azurewebsites.net/ can speed up your work. I have written many lines of code with Roslyn and was happy that it worked, but honestly, a day later I had to learn it all over again because it is so complicated. I really like clean code, but the Roslyn code I wrote is code I'm ashamed of - check it out. Maybe you can clean it up, but it would definitely not be nice to work with.

Why

So why do we need to generate code? This approach has some useful use cases:

- We constantly have to write boilerplate code, and we could automate this with SG

- We have data files that we need to parse to get strongly typed code

- We have a GUI defined in a file generated by the IDE, in a binary format or a declarative language, and a generator can interpret that file

- We have reflection-based code that can be slow at runtime; we can generate the code during the build instead and make the runtime fast. Microsoft already has some ideas for improving serialization, gRPC and other code in its codebase this way.

Those are just a few examples, but I think there are many more, and their number will grow once people understand what kind of tool they have in their hands.

There is already a discussion about how SG can be used in .NET itself.

Basic generator

Note that the .NET 5 SDK and Visual Studio/MSBuild 16.8 are required to build the project, but I'm not aware of any limitation that prevents using SG outside .NET 5. The samples provided by Microsoft on GitHub use .NET Core 3.1 as the runtime, so it should work with your current code.

When building your projects with the CLI, it is best to use the latest MSBuild. I had issues even with version 16.8.0 bundled with 'dotnet build' and had to use MSBuild directly (16.8.2) to resolve an error where the SG could not find references to local projects (they worked in VS, but the dotnet CLI failed to build).

Source Generators are part of the Roslyn family of tools. Roslyn has great capabilities: it lets you write code analyzers and code fixes to guard your code, run the whole compilation process inside your own code (I will show this technique later), and much more. With .NET 5, the Roslyn SDK gains a new feature named Source Generators.

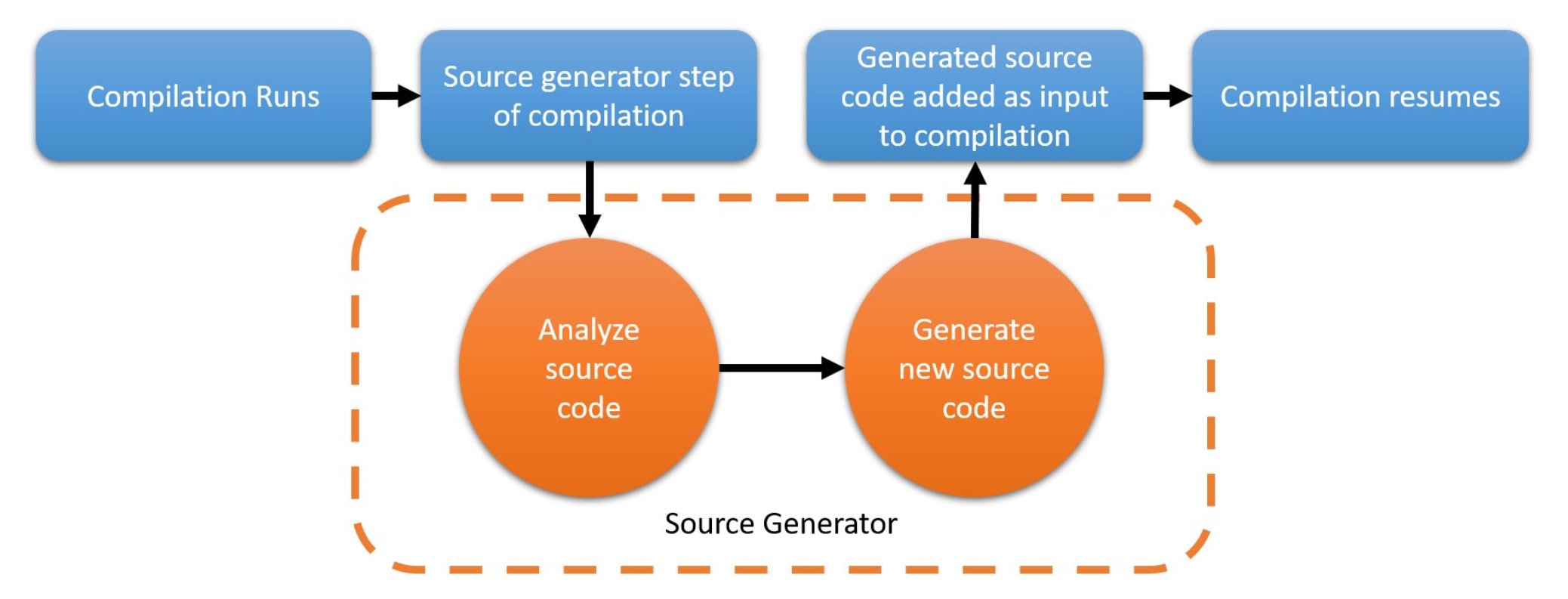

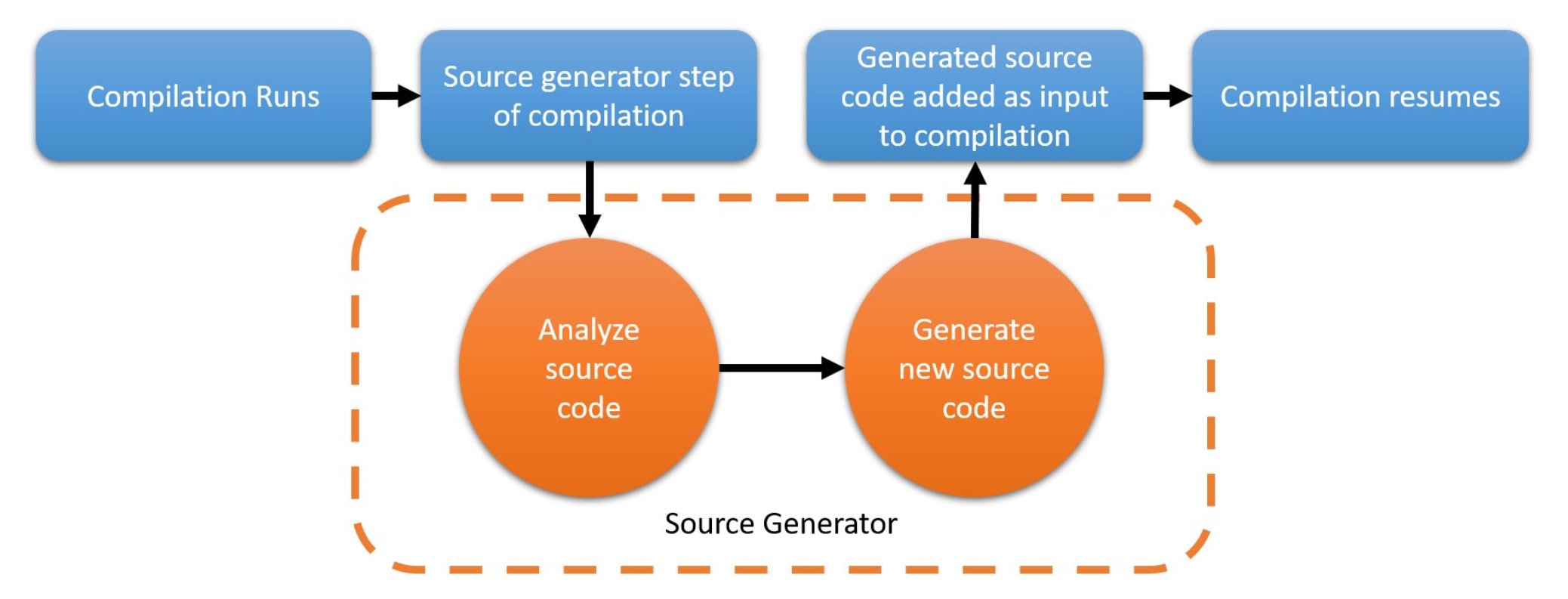

SG are part of the compilation process and let you hook into it. As a programmer you get the Roslyn compilation as input, and you can add something to it. One important restriction is that Microsoft does not allow you to change anything: you can only add new code, not modify existing code.

To start with SG, create a .NET Standard 2.0 project with a NuGet reference to Microsoft.CodeAnalysis.CSharp, version 3.8 or later. The simplest code looks like the snippet below. Note that the class has to implement the ISourceGenerator interface and be decorated with the Generator attribute. Then you can generate source code based on the compilation or on additional files, which we will talk about later. And that is basically all.

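```csharp
using Microsoft.CodeAnalysis;

[Generator]
public class ActorProxySourceGenerator : ISourceGenerator
{
    public void Initialize(GeneratorInitializationContext context)
    {
        // Syntax receivers are registered here (see the next section).
    }

    public void Execute(GeneratorExecutionContext context)
    {
        // identifier: a unique name for the generated source; generatedCode: the C# text to add.
        var identifier = "ActorProxies";
        var generatedCode = "// generated code goes here";

        context.AddSource(identifier, generatedCode);
    }
}
```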

Note that at the time of writing only C# is supported. VB.NET support will probably be added, but F# uses a different compiler, and there are currently no plans to support SG there.

So, in contrast to the techniques described earlier, you can use any template engine, such as T4 or another one, to generate code; in our example I will use Scriban. Its output is what you "feed" into the AddSource method as the generatedCode argument. The other argument, identifier (called hintName in the API), has to be unique because it identifies the generated source. The Initialize method is where you register syntax receivers; this is explained in the next section.
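Because the identifier passed to AddSource must be unique, it helps to derive it deterministically from the class being processed. The sketch below is a hypothetical helper (HintNameRegistry is not part of Roslyn or of the example repository) that builds a file-name-safe name from the namespace and class name and appends a counter on collisions. It compares names case-insensitively to be on the safe side.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper, not part of Roslyn: produces unique, file-name-safe hint names for AddSource.
public sealed class HintNameRegistry
{
    // Case-insensitive, so names that differ only in casing are treated as collisions.
    private readonly HashSet<string> _used = new HashSet<string>(StringComparer.OrdinalIgnoreCase);

    public string Create(string namespaceName, string className)
    {
        var raw = string.IsNullOrEmpty(namespaceName) ? className : namespaceName + "." + className;

        // Keep letters, digits, '.' and '_'; replace anything else (for example '<' and '>' of generics) with '_'.
        var builder = new StringBuilder(raw.Length);
        foreach (var ch in raw)
        {
            builder.Append(char.IsLetterOrDigit(ch) || ch == '.' || ch == '_' ? ch : '_');
        }

        var baseName = builder.ToString();
        var candidate = baseName + ".g.cs";
        var counter = 1;

        // Append a counter until the name has not been handed out before.
        while (!_used.Add(candidate))
        {
            candidate = baseName + "_" + counter++ + ".g.cs";
        }

        return candidate;
    }
}
```

In Execute you would create one registry per run and call, for example, context.AddSource(registry.Create(model.Namespace, model.ClassName), generatedCode).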

Now that we have the basic knowledge, we can dig deeper into each aspect. We will refer to the complete example, which you can find here.

Analyze - Compilation

One of the main parts of source generation is interpreting some input before generating code. It is good practice to separate this step and build a model that is used in the next step. One input we can use is the Compilation itself. With this object you have access to the syntax trees and semantic models of the whole project being compiled. I will not dig deep into those topics because they deserve a separate article. In short, a SyntaxTree is a model of your source code, so everything you see in the source code you will also see in it. But remember that syntax trees are only parsed text and are not aware of the full model that is available in the semantic model. For example, when your class has a base class, you can get the name of the base class, but you can't learn anything more about it from your class's syntax tree alone, because that tree contains only the name. You could search all syntax trees to find the one for the base class, but there is a better way. The semantic model gives you more because it is already interpreted: you have access to types and can, for example, walk the whole chain of base types of your class. To get the semantic model for a syntax tree, simply use:

```csharp
compilation.GetSemanticModel(classSyntax.SyntaxTree)
```

Microsoft recommends searching the compilation with objects called syntax receivers. They follow the visitor pattern: during compilation, the compiler visits every SyntaxNode (a syntax tree, as the name suggests, is a tree of code elements, and each node of this tree is a SyntaxNode) and passes it to your receiver. Your custom receiver decides whether the node is a candidate for code generation. In our example we have a custom attribute named ProxyAttribute; when it is found on a class, the class is saved to an internal list.

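```csharp
using System.Collections.Generic;
using Microsoft.CodeAnalysis;
using Microsoft.CodeAnalysis.CSharp.Syntax;

internal class ActorSyntaxReceiver : ISyntaxReceiver
{
    public List<ClassDeclarationSyntax> CandidateProxies { get; } = new List<ClassDeclarationSyntax>();

    // Called by the compiler for every syntax node in the compilation.
    public void OnVisitSyntaxNode(SyntaxNode syntaxNode)
    {
        // HaveAttribute is an extension method from the example repository.
        if (syntaxNode is ClassDeclarationSyntax classSyntax && classSyntax.HaveAttribute(ProxyAttribute.Name))
        {
            CandidateProxies.Add(classSyntax);
        }
    }
}
```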

Note that the example uses some extension methods for Roslyn types (such as HaveAttribute) to simplify the code; you can find them here.

You register the receiver in the generator's Initialize method:

```csharp
public void Initialize(GeneratorInitializationContext context)
{
    context.RegisterForSyntaxNotifications(() => new ActorSyntaxReceiver());
}
```

When the Execute method is called, you can access your receiver through the SyntaxReceiver property of the GeneratorExecutionContext and then iterate over all the classes it has found:

```csharp
if (context.SyntaxReceiver is ActorSyntaxReceiver actorSyntaxReceiver)
{
    foreach (var proxy in actorSyntaxReceiver.CandidateProxies)
    {
        // Build a model for each candidate class and generate code from it.
    }
}
```

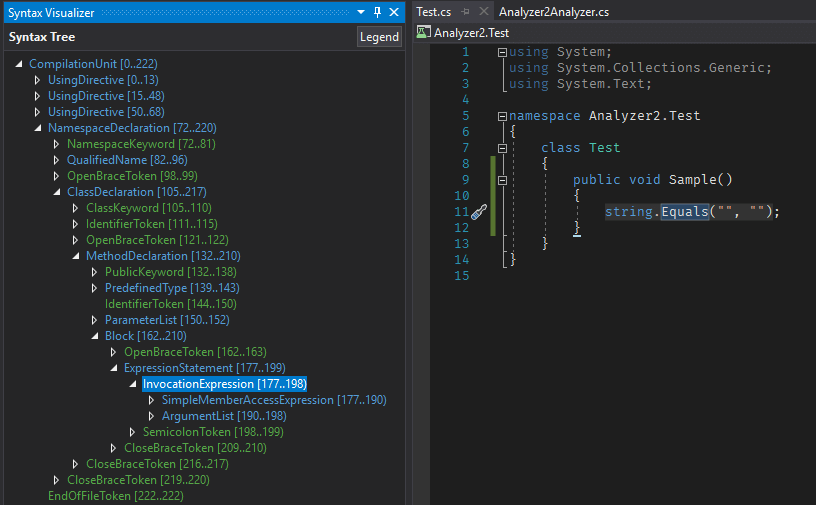

When you start playing with syntax trees, it is hard to dig through all those Roslyn objects. I recommend installing the .NET Compiler Platform SDK; it is available as a separate component in the Visual Studio Installer. After installing it, VS gets a Syntax Visualizer window that visualizes the file currently open in the editor. This gives you a quick way to see how a syntax tree is structured.

In our example, the GetModel method extracts all the information needed to build the model. As I mentioned earlier, I will not explain the whole code; working through it yourself is a good exercise to see how this can be done.

```csharp
private ProxyModel GetModel(ClassDeclarationSyntax classSyntax, Compilation compilation)
{
    var root = classSyntax.GetCompilationUnit();
    var classSemanticModel = compilation.GetSemanticModel(classSyntax.SyntaxTree);
    var classSymbol = classSemanticModel.GetDeclaredSymbol(classSyntax);

    var proxyModel = new ProxyModel
    {
        ClassBase = classSyntax.GetClassName(),
        ClassName = $"{classSyntax.GetClassName()}{ProxyAttribute.Name}",
        ClassModifier = classSyntax.GetClassModifier(),
        Usings = root.GetUsings(),
        Namespace = root.GetNamespace(),
        Commands = GetMethodWithParameter(classSyntax, classSemanticModel, nameof(Command)),
        Queries = GetMethodWithParameter(classSyntax, classSemanticModel, nameof(Query)),
        Events = GetMethodWithParameter(classSyntax, classSemanticModel, nameof(Event)),
        ConstructorParameters = GetConstructor(classSymbol),
        InjectedProperties = GetInjectedProperties(classSymbol),
        Subscriptions = GetSubscriptions(classSyntax, classSemanticModel)
    };

    return proxyModel;
}
```

Analyze - Additional Files

SG gives developers additional ways to gather information and build a model from it. In the project that uses a generator, you can declare AdditionalFiles items in the csproj:

```xml
<ItemGroup>
  <AdditionalFiles Include="People.csv" CsvLoadType="Startup" />
  <AdditionalFiles Include="Cars.csv" CsvLoadType="OnDemand" CacheObjects="true" />
</ItemGroup>
```

You can add any files you want in that section and then read them through context.AdditionalFiles. Each item in this collection is an AdditionalText with a Path property that holds the file path and a GetText() method that returns its content. With this information you can do basically anything, for example parse JSON/XML/CSV and build whatever model you like.
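As an illustration of building a model from an additional file, here is a minimal sketch that turns CSV content into a model and then into a class. It uses only the BCL; CsvModel and CsvModelBuilder are hypothetical names, the naive comma split does not handle quoted fields, and header names are assumed to be valid C# identifiers. Inside a generator you would call Build for each item in context.AdditionalFiles, passing file.Path and the string returned by GetText().

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Text;

// Model built from one CSV file: class name from the file name, properties from the header row.
public sealed class CsvModel
{
    public string ClassName { get; set; } = "";
    public List<string> Columns { get; } = new List<string>();
    public List<string[]> Rows { get; } = new List<string[]>();
}

public static class CsvModelBuilder
{
    public static CsvModel Build(string path, string content)
    {
        var model = new CsvModel { ClassName = Path.GetFileNameWithoutExtension(path) };
        var lines = content.Split(new[] { "\r\n", "\n" }, StringSplitOptions.RemoveEmptyEntries);
        if (lines.Length == 0) return model;

        // Naive split on ',': quoted fields are not supported in this sketch.
        model.Columns.AddRange(lines[0].Split(',').Select(c => c.Trim()));
        foreach (var line in lines.Skip(1))
        {
            model.Rows.Add(line.Split(',').Select(c => c.Trim()).ToArray());
        }

        return model;
    }

    // Emits a class with one string property per CSV column.
    public static string Generate(CsvModel model, string ns)
    {
        var sb = new StringBuilder();
        sb.AppendLine($"namespace {ns}");
        sb.AppendLine("{");
        sb.AppendLine($"    public partial class {model.ClassName}");
        sb.AppendLine("    {");
        foreach (var column in model.Columns)
        {
            sb.AppendLine($"        public string {column} {{ get; set; }}");
        }
        sb.AppendLine("    }");
        sb.AppendLine("}");
        return sb.ToString();
    }
}
```

Keeping parsing (Build) separate from code generation (Generate) follows the model-first approach described earlier and lets the parsing step be unit-tested on its own.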

For a more advanced sample, read New C# Source Generator Samples.

Template

I mentioned before that the real power of SG is the ability to use any template engine. For this example, after some research, I found that the best choice for generating C# is Scriban. I chose it because it has powerful template features and its performance is very good and well measured.

Note that performance is not the most important factor here, but it is not irrelevant either: a generator runs on every build (and in the IDE much more often), so good performance is always welcome.

In our example we prepared the following template:

```text
{{ for using in Usings ~}}
using {{ using }};
{{ end ~}}
namespace {{Namespace}}
{
    [GeneratedCode]
    {{~ ClassModifier }} sealed class {{ClassName}} : {{ClassBase}}
    {
        public {{ClassName}}({{ for par in Constructor }} {{par.Type}} {{par.Name}}{{ if !for.last}},{{ end -}}{{- end }}) : base({{ for par in BaseConstructor }}{{par}}{{ if !for.last}},{{ end -}}{{- end }})
        {
            {{ for prop in InjectedProperties ~}}
            {{ prop.Destination }} = {{ prop.Source }};
            {{ end ~}}
        }
        protected async override Task ReceiveAsyncInternal(Proto.IContext context)
        {
            if (await HandleSystemMessages(context))
                return;
            var msg = FormatMessage(context.Message);
            if (msg is ActorMessage ic)
            {
                ic.Context = context;
            }
            {{- "\n" -}}
            {{- #---------------- Generate list of command handlers ------------------------}}
            {{ for command in Commands }}
            {{ if for.index == 0 }}if{{ else }}else if{{ end -}}
            (msg is {{command.ParameterType}} command_{{for.index}})
            {
                {{ if command.IsReturnTask }}await {{ end }}{{command.MethodName}}(command_{{for.index}});
                return;
            }
            {{- end }}
            {{- #---------------- Generate list of command handlers END --------------------}}
            await UnhandledMessage(msg);
        }
    }
}
```

What the generated code actually does is not that important here: it is a proxy class that automatically overrides the ReceiveAsyncInternal method of the base class by scanning all methods that take a Command/Query/Event as input.

Compared to the code built manually with Roslyn that I showed at the beginning, this is like night and day: you can see exactly what code will be generated. Now you can also understand why I talked about building a model first. Once you have a good model, all you need to do is put its properties in the right places and you are done.

I will not dig into the template syntax because you can read about it in the documentation, but here is a short introduction: you access model properties using double curly braces {{ }}. For iteration you use {{for element in model.Collection}}. I also used the special loop variables for.last and for.index, which tell you whether you are in the last iteration and what the index of the current iteration is. I also used '-' and '~' to control whitespace and the # sign to add comments. That is basically all the functionality I used; I suggest reading about the rest of Scriban's features on your own.

With the model and template ready, you just run the engine and get the result:

```csharp
var template = Template.Parse(templateString);
if (template.HasErrors)
{
    var errors = string.Join(" | ", template.Messages.Select(x => x.Message));
    throw new InvalidOperationException($"Template parse error: {errors}");
}

// Keep the original .NET member names instead of Scriban's default renaming.
var result = template.Render(model, memberRenamer: member => member.Name);

// Let Roslyn reformat the generated code.
result = SyntaxFactory.ParseCompilationUnit(result)
    .NormalizeWhitespace()
    .GetText()
    .ToString();
```

Additionally, we check whether parsing the template succeeded, and we use another Roslyn feature, NormalizeWhitespace, to format the code. Yes, you can do this kind of thing too ;)

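Note that by default Scriban renames .NET members when exposing them to the template (its standard renamer turns a name like ClassName into class_name), even though your model uses PascalCase. To keep the original names, we pass member => member.Name as the memberRenamer.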

One thing to note is how we store our templates. I have seen samples that keep them as strings assigned to variables in the same code as the model and the rest of the logic. Personally, I prefer to put templates in separate files: it is cleaner, and alignment such as spaces/tabs is easier to format. The only thing you need to do with this approach is set the Build Action of those files to Embedded resource and read them with some helper code. In a later section I will show how to add all the files we want as embedded resources the MSBuild way.

```csharp
using System;
using System.IO;
using System.Linq;
using System.Reflection;

internal class ResourceReader
{
    public static string GetResource<TAssembly>(string endWith) => GetResource(endWith, typeof(TAssembly));

    public static string GetResource(string endWith, Type assemblyType = null)
    {
        var assembly = GetAssembly(assemblyType);

        // Manifest resource names are prefixed with the default namespace and folder path,
        // so the lookup matches on the end of the name.
        var resources = assembly.GetManifestResourceNames().Where(r => r.EndsWith(endWith)).ToList();

        if (!resources.Any()) throw new InvalidOperationException($"There is no resource that ends with '{endWith}'");

        if (resources.Count > 1) throw new InvalidOperationException($"There is more than one resource that ends with '{endWith}'");

        var resourceName = resources.Single();

        return ReadEmbededResource(assembly, resourceName);
    }

    private static Assembly GetAssembly(Type assemblyType)
    {
        Assembly assembly;

        if (assemblyType == null)
        {
            // Without an explicit type, look in the assembly that contains this helper.
            assembly = Assembly.GetExecutingAssembly();
        }
        else
        {
            assembly = Assembly.GetAssembly(assemblyType);
        }

        return assembly;
    }

    private static string ReadEmbededResource(Assembly assembly, string name)
    {
        using var resourceStream = assembly.GetManifestResourceStream(name);
        if (resourceStream == null) return null;

        using var streamReader = new StreamReader(resourceStream);
        return streamReader.ReadToEnd();
    }
}
```

Once the code is generated, we are basically done developing the main component, but there are many other important aspects to discuss.

Testing

Testing is a very important part of every developer's process, and when writing code generators it is a must-have for me. I wrote my code using a unit-test-first approach, and when I was done I started integrating it into the application. In this section I will show how you can use Roslyn to prepare a test environment that lets you execute an SG in isolation.

Every test should have the AAA (Arrange, Act, Assert) structure. When testing generators, we need input and expected-output classes for the Arrange and Assert parts, because the test reads those classes and checks whether we generated the expected output. As with templates, I prefer to keep the input and output in separate files that my tests read. For the comparison I use an extension method, AssertSourceCodesEquals, that removes all whitespace and compares the code without it, because we don't want our tests to fail over whitespace differences.
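The repository's AssertSourceCodesEquals is not shown here, so the following is a minimal sketch of the whitespace-insensitive comparison described above, not the author's implementation. It throws InvalidOperationException to stay framework-neutral; in an xUnit project you would more likely call Assert.Equal on the normalized strings.

```csharp
using System;
using System.Linq;

public static class SourceCodeAssertions
{
    // Removes every whitespace character so formatting differences are ignored.
    public static string StripWhitespace(string code) =>
        new string(code.Where(ch => !char.IsWhiteSpace(ch)).ToArray());

    // Throws when the two sources differ after whitespace removal.
    public static void AssertSourceCodesEquals(this string expected, string actual)
    {
        var normalizedExpected = StripWhitespace(expected);
        var normalizedActual = StripWhitespace(actual);

        if (!string.Equals(normalizedExpected, normalizedActual, StringComparison.Ordinal))
        {
            throw new InvalidOperationException(
                $"Generated code differs from expected.\nExpected: {normalizedExpected}\nActual:   {normalizedActual}");
        }
    }
}
```

The trade-off of stripping all whitespace is that "public class A" and "publicclassA" compare as equal; a stricter option is to run both sources through Roslyn's NormalizeWhitespace (used in the Template section) and compare the results.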

The 'Act' part of the test relies on two important things. The first is the Roslyn feature I mentioned at the beginning: we can compile code inside code itself, because Roslyn is a compiler as a service. The process is very straightforward: we provide the source code of our project and add the references it uses. In the example I add all assemblies from the solution, selected by project name, to filter out everything else.

```csharp
private static Compilation CreateCompilation(string source)
{
    var syntaxTrees = new[]
    {
        CSharpSyntaxTree.ParseText(source, new CSharpParseOptions(LanguageVersion.Preview))
    };

    var references = new List<PortableExecutableReference>();
    foreach (var library in DependencyContext.Default.RuntimeLibraries.Where(lib => lib.Name.IndexOf("HomeCenter.") > -1))
    {
        var assembly = Assembly.Load(new AssemblyName(library.Name));
        references.Add(MetadataReference.CreateFromFile(assembly.Location));
    }

    references.Add(MetadataReference.CreateFromFile(typeof(Binder).GetTypeInfo().Assembly.Location));

    var options = new CSharpCompilationOptions(OutputKind.DynamicallyLinkedLibrary);
    var compilation = CSharpCompilation.Create(nameof(GeneratorRunner), syntaxTrees, references, options);

    return compilation;
}
```

Note that I'm using the .NET Core feature DependencyContext.Default.RuntimeLibraries (from the Microsoft.Extensions.DependencyModel package), which lists all libraries the test project depends on. Unlike other approaches, it is not limited to assemblies that are already loaded at runtime.

Next we add the core library assembly, obtained from typeof(Binder), and create the compilation. Remember that adding all dependent libraries is important: when something is missing, the compilation is still created, but some lookups return empty results. For example, I was searching for a base class in the semantic model, and without all dependent assemblies I simply got null.
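If you prefer not to depend on DependencyContext, a common alternative (a different technique from the one used in the example) is the host's trusted platform assemblies list, exposed through AppContext.GetData("TRUSTED_PLATFORM_ASSEMBLIES") on .NET Core. The sketch below only collects file paths, filtered by file-name prefix; each path would then go to MetadataReference.CreateFromFile. ReferencePaths is a hypothetical name, and on hosts that do not set this value the method simply returns an empty list.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

public static class ReferencePaths
{
    // Paths of the assemblies the .NET Core host lists as trusted platform assemblies,
    // optionally filtered by the start of the file name (for example "HomeCenter.").
    public static IReadOnlyList<string> FromTrustedPlatformAssemblies(string fileNamePrefix = "")
    {
        var tpa = AppContext.GetData("TRUSTED_PLATFORM_ASSEMBLIES") as string ?? string.Empty;

        return tpa
            .Split(new[] { Path.PathSeparator }, StringSplitOptions.RemoveEmptyEntries)
            .Where(p => Path.GetFileName(p).StartsWith(fileNamePrefix, StringComparison.OrdinalIgnoreCase))
            .ToList();
    }
}
```

This list includes both the framework assemblies and the application's own dependencies, so a single call with an empty prefix already covers the core library that typeof(Binder) provides in the example.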

With the compilation in place, we can use CSharpGeneratorDriver.Create to run our custom SG and get the results.

```csharp
Compilation compilation = CreateCompilation(sourceCode);

var driver = CSharpGeneratorDriver.Create(ImmutableArray.Create(generators),
    ImmutableArray<AdditionalText>.Empty,
    (CSharpParseOptions)compilation.SyntaxTrees.First().Options,
    null);

driver.RunGeneratorsAndUpdateCompilation(compilation, out var outputCompilation, out var diagnostics);
```

We can then compare the generated output or check the diagnostics.

```csharp
[Fact]
public async Task ProxyGeneratorTest()
{
    var userSource = await File.ReadAllTextAsync(@"..\..\..\TestInputs\TestAdapter.cs");
    var expectedResult = await File.ReadAllTextAsync(@"..\..\..\TestOutputs\TestAdapterOutput.cs");

    var result = GeneratorRunner.Run(userSource, new ActorProxySourceGenerator());

    expectedResult.AssertSourceCodesEquals(result.GeneratedCode);
}
```

With this infrastructure in place, we can write our custom SGs and test them without using them in actual code. When everything is tested, we can go into the wild, but there are still some important topics to cover.

Msbuild

Note that everything described below applies to using an SG through a project reference; I didn't have time to experiment with the NuGet approach in this version.

Apart from the integration with Roslyn, there is also integration with MSBuild. To use our custom SG we have to reference it from another project; we cannot define and use an SG in the same project.

During the preview, the consuming project has to use the preview language version until the final release:

```xml
<LangVersion>preview</LangVersion>
```

Next, when adding a reference to the SG project, we have to add the OutputItemType and ReferenceOutputAssembly attributes. OutputItemType="Analyzer" makes the build load the project as an analyzer (which is how generators are loaded), and ReferenceOutputAssembly="false" keeps its assembly out of our project's references and output, because after code generation we don't need the SG in production code.

```xml
<ProjectReference Include="..\HomeCenter.SourceGenerators\HomeCenter.SourceGenerators.csproj" OutputItemType="Analyzer" ReferenceOutputAssembly="false" />
```

Additionally, we can define properties in the project file of the project that uses the SG and read them in the SG. For example, we have defined a couple of properties:

```xml
<PropertyGroup>
  <SourceGenerator_EnableLogging>True</SourceGenerator_EnableLogging>
  <SourceGenerator_EnableDebug>False</SourceGenerator_EnableDebug>
  <EmitCompilerGeneratedFiles>true</EmitCompilerGeneratedFiles>
</PropertyGroup>
```

To make them readable from the SG, we have to mark them as compiler-visible:

```xml
<ItemGroup>
  <CompilerVisibleProperty Include="SourceGenerator_EnableLogging" />
  <CompilerVisibleProperty Include="SourceGenerator_DetailedLog" />
  <CompilerVisibleProperty Include="SourceGenerator_EnableDebug" />
</ItemGroup>
```

After this, we can read the properties through context.AnalyzerConfigOptions using the key 'build_property.PropertyName', as in the code below. We can also add custom metadata to additional files and read it in a similar manner with the key 'build_metadata.AdditionalFiles.MetadataName' (each such metadata name must also be declared with a CompilerVisibleItemMetadata item).

```csharp
public bool TryReadGlobalOption(GeneratorExecutionContext context, string property, out string value)
{
    return context.AnalyzerConfigOptions.GlobalOptions.TryGetValue($"build_property.{property}", out value);
}

public bool TryReadAdditionalFilesOption(GeneratorExecutionContext context, AdditionalText additionalText, string property, out string value)
{
    return context.AnalyzerConfigOptions.GetOptions(additionalText).TryGetValue($"build_metadata.AdditionalFiles.{property}", out value);
}
```
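The values come back as strings, so switches like SourceGenerator_EnableLogging still need interpreting. The sketch below uses a plain dictionary as a stand-in for AnalyzerConfigOptions.GlobalOptions (an assumption made so it runs without Roslyn). Because the options dump shown later lists the keys in lowercase, the dictionary in the usage is created with a case-insensitive comparer. bool.TryParse accepts both True and true, and a missing or empty property counts as disabled. GeneratorOptions is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;

public static class GeneratorOptions
{
    // Stand-in for context.AnalyzerConfigOptions.GlobalOptions: keys look like "build_property.<Name>".
    public static bool IsEnabled(IReadOnlyDictionary<string, string> globalOptions, string property)
    {
        // Unset properties may be missing or have an empty value; both count as "disabled".
        return globalOptions.TryGetValue("build_property." + property, out var value)
            && bool.TryParse(value, out var enabled)
            && enabled;
    }
}
```

In the generator itself you would replace the dictionary lookup with the TryReadGlobalOption helper shown above and keep the same parsing rule.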

There is also a way to put these properties in an .editorconfig file, but to make them visible in the global section of context.AnalyzerConfigOptions, we have to mark the .editorconfig with 'is_global = true'. Without this switch, the properties are available only in the syntax-tree part of AnalyzerConfigOptions. What does that mean? Configuration can be read globally (the first method in the example above), per AdditionalFile, or per syntax tree. At the beginning this is hard to dig into, which is why I wrote some code that dumps all configuration values; it is part of the logging process described below.

Note that this code uses reflection, because Microsoft decided to keep this information internal, so it is meant for learning/debugging purposes only and may break in future versions.

With this dump we can easily see all the values we can use and check whether we defined our custom properties correctly. For example, we can see our sourcegenerator_enablelogging from the csproj and mygenerator_emit_loggingxxxxxx from .editorconfig:

```text
-> Global options:
mygenerator_emit_loggingxxxxxx:true
build_property.includeallcontentforselfextract:
build_property.projecttypeguids:
build_property.sourcegenerator_enablelogging:True
build_property.targetframework:net5.0
build_property.targetplatformminversion:
build_property.sourcegenerator_detailedlog:True
build_property.publishsinglefile:
build_property._supportedplatformlist:Android,iOS,Linux,macOS,Windows
build_property.usingmicrosoftnetsdkweb:
-> Options:
- ParsedSyntaxTree[E:\Projects\SourceGenerators\SourceGenerators\HomeCenter.SourceGenerators\HomeCenter.Example\obj\Debug\net5.0\HomeCenter.Example.AssemblyInfo.cs]:
mygenerator_emit_loggingxxxxxx:true
indent_size:4
build_property.includeallcontentforselfextract:
dotnet_separate_import_directive_groups:false
dotnet_sort_system_directives_first:false
dotnet_naming_rule.instance_fields_should_be_camel_case.symbols:instance_fields
build_property.projecttypeguids:
indent_style:space
build_property.sourcegenerator_enablelogging:True
dotnet_naming_rule.instance_fields_should_be_camel_case.style:instance_field_style
dotnet_naming_rule.instance_fields_should_be_camel_case.severity:suggestion
build_property.targetframework:net5.0
build_property.targetplatformminversion:
dotnet_naming_style.instance_field_style.capitalization:camel_case
build_property.sourcegenerator_detailedlog:True
mygenerator_emit_logging2:true
dotnet_naming_symbols.instance_fields.applicable_kinds:field
build_property.publishsinglefile:
build_property._supportedplatformlist:Android,iOS,Linux,macOS,Windows
build_property.usingmicrosoftnetsdkweb:
dotnet_naming_style.instance_field_style.required_prefix:_
```

The next MSBuild topic is automatically turning all files with the template extension into embedded resources. We can do this in Directory.Build.props; such a file lets us add common settings to all project files below it in the directory tree. It is a convenient single place for shared settings, so we don't repeat ourselves in each project file.

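Keep in mind that these files are not hierarchical by design: MSBuild imports the first Directory.Build.props it finds walking up from the project directory, so the file applies to all projects below it in the directory tree, but the search stops at that file (unlike .editorconfig, where files are read all the way up to the root of the drive, unless one is marked root = true, and the results are merged). We can change this behavior by explicitly importing the parent file: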

```xml
<Import Project="$([MSBuild]::GetPathOfFileAbove('Directory.Build.props', '$(MSBuildThisFileDirectory)../'))" />
```

Using MSBuild, we can write something like this to turn all files with the .scriban extension into embedded resources:

```xml
<ItemGroup>
  <EmbeddedResource Include="@(None -> WithMetadataValue('Extension', '.scriban'))" />
</ItemGroup>
```

The last topic in this section is using additional libraries in our SG.

The solution described below is only needed when using project references; with NuGet the situation is different.

Our SG uses Scriban, and when we try to use the SG in another project, we get an error saying that the Scriban library cannot be found. We can fix this manually using the approach from the sample:

```xml
<ItemGroup>
  <PackageReference Include="Newtonsoft.Json" Version="12.0.1" GeneratePathProperty="true" PrivateAssets="all" />
</ItemGroup>

<PropertyGroup>
  <GetTargetPathDependsOn>$(GetTargetPathDependsOn);GetDependencyTargetPaths</GetTargetPathDependsOn>
</PropertyGroup>

<Target Name="GetDependencyTargetPaths">
  <ItemGroup>
    <TargetPathWithTargetPlatformMoniker Include="$(PKGNewtonsoft_Json)\lib\netstandard2.0\Newtonsoft.Json.dll" IncludeRuntimeDependency="false" />
  </ItemGroup>
</Target>
```

So we have to mark the NuGet reference with GeneratePathProperty="true" and then use the generated property name PKGNewtonsoft_Json in TargetPathWithTargetPlatformMoniker (the name gets a PKG prefix, and the dots are changed to _).
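The naming rule can be written down as a tiny function, which is handy when you generate these MSBuild snippets for several packages. This is a sketch of the rule as described above ("Pkg" prefix, dots replaced with underscores); MSBuild property names are case-insensitive, so PKGNewtonsoft_Json and PkgNewtonsoft_Json refer to the same property. NuGetPathProperty is a hypothetical name, and NetStandardDllPath assumes the DLL is named after the package and lives under lib/netstandard2.0, which is true for Newtonsoft.Json but not for every package.

```csharp
using System;

public static class NuGetPathProperty
{
    // "Pkg" prefix plus the package id with '.' replaced by '_'.
    public static string For(string packageId) => "Pkg" + packageId.Replace('.', '_');

    // Hypothetical convenience: assumes the DLL name matches the package id and targets netstandard2.0.
    public static string NetStandardDllPath(string packageId) =>
        $"$({For(packageId)})\\lib\\netstandard2.0\\{packageId}.dll";
}
```

For Newtonsoft.Json this yields $(PkgNewtonsoft_Json)\lib\netstandard2.0\Newtonsoft.Json.dll, the same path as in the snippet above apart from the casing of the prefix.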

Alternatively, we can automate this, as I did: we just add a custom attribute I created, LocalSourceGenerators, to the package reference, and some MSBuild magic adds all dependencies automatically.

```xml
<PackageReference Include="Scriban" Version="2.1.4" PrivateAssets="all" LocalSourceGenerators="true" />
```

Logging

When writing this kind of code for the first time, it is hard to spot errors, so some diagnostics are very helpful. Once the generator works, all we need to see its output is to set

```xml
<PropertyGroup>
  <EmitCompilerGeneratedFiles>true</EmitCompilerGeneratedFiles>
  <CompilerGeneratedFilesOutputPath>$(BaseIntermediateOutputPath)Generated</CompilerGeneratedFilesOutputPath>
</PropertyGroup>
```

in the project that uses our SG. With this switch set, we can find the generated code in the obj/Generated folder of that project, but for me this is not enough. This is why I created a logging mechanism that we use to:

- log generated files

- log a dump of all options (described previously; this is only available when detailed logging is turned on with SourceGenerator_DetailedLog=true)

- log errors when generation fails

Logging is enabled only when SourceGenerator_EnableLogging is set. We can also change the path where the log file is written with SourceGenerator_LogPath (by default it is the obj folder of our SG). The logger sources can be found here.
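The logger in the repository is more complete; as a rough illustration only, here is a minimal hypothetical file logger (SimpleGeneratorLogger is not the repository's class) that writes nothing unless logging is enabled and appends timestamped entries to one file per generator under the configured directory. File I/O inside a generator should stay a diagnostic aid behind such a switch, as in the article.

```csharp
using System;
using System.IO;

// Hypothetical minimal logger: a no-op when disabled, otherwise appends to <logDirectory>/<generatorName>.log.
public sealed class SimpleGeneratorLogger
{
    private readonly bool _enabled;

    public SimpleGeneratorLogger(bool enabled, string logDirectory, string generatorName)
    {
        _enabled = enabled;
        LogFile = Path.Combine(logDirectory, generatorName + ".log");
    }

    public string LogFile { get; }

    public void Log(string message)
    {
        if (!_enabled) return;

        Directory.CreateDirectory(Path.GetDirectoryName(LogFile));
        // Timestamp each entry so output from consecutive builds can be told apart.
        File.AppendAllText(LogFile, $"[{DateTime.Now:HH:mm:ss}] {message}{Environment.NewLine}");
    }

    public void LogSourceCode(string className, string generatedSource) =>
        Log($"Generated {className}:{Environment.NewLine}{generatedSource}");

    public void LogError(string className, Exception exception) =>
        Log($"Error in {className}: {exception}");
}
```

In a generator, the enabled flag and directory would come from SourceGenerator_EnableLogging and SourceGenerator_LogPath, read with the option helpers from the MSBuild section.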

Additionally, when something goes wrong, we can report a Diagnostic through the ReportDiagnostic method of our context. We can even attach the diagnostic to a specific location in the code.

```csharp
public void TryLogSourceCode(ClassDeclarationSyntax classDeclaration, string generatedSource)
{
    Diagnostic.ReportInformation(classDeclaration.GetLocation());
    Logger.TryLogSourceCode(classDeclaration, generatedSource);
}

public void ReportInformation(Location location = null)
{
    if (location == null)
    {
        location = Location.None;
    }

    _generatorExecutionContext.ReportDiagnostic(Diagnostic.Create(_infoRule, location, typeof(T).Name));
}
```

We can see errors in VS:

The last piece of our example is code that, when something goes wrong, generates an empty class containing the error details. This is an additional step that gives a quick look at what failed.

```csharp
public GeneratedSource GenerateErrorSourceCode(Exception exception, ClassDeclarationSyntax classDeclaration)
{
    var context = $"[{typeof(T).Name} - {classDeclaration.Identifier.Text}]";
    var templateString = ResourceReader.GetResource("ErrorModel.cstemplate");
    templateString = templateString.Replace("//Error", $"#error {context} {exception.Message} | Logfile: {Options.LogPath}");

    return new GeneratedSource(templateString, classDeclaration.Identifier.Text);
}
```

At the time of writing, we could not navigate to generated files because IDE support was not finished.

Debugging

Debugging is also useful in the early stages of development, or when we hit a weird situation and logging is not enough. Luckily, debugging is really easy; all we need to do is add

```csharp
Debugger.Launch();
```

But to do it more professionally, we can put it behind configuration: when SourceGenerator_EnableDebug is set, debugging is enabled for all SGs, and when SourceGenerator_EnableDebug_SGType is set (where SGType is the type name of our SG), the debugger is attached only for that generator. With this set, building the code shows a prompt asking which VS instance to use, and then we can debug our code.
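The switch logic described above can be sketched as follows. GeneratorDebugging is a hypothetical name, and readOption is an assumed callback that returns the raw property value or null when it is not set; in a generator it could wrap the TryReadGlobalOption helper from the MSBuild section. Both property names must also be declared as CompilerVisibleProperty items, otherwise the generator never sees them.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

public static class GeneratorDebugging
{
    // True when debugging is enabled for all generators or for this generator type specifically.
    public static bool ShouldLaunch(Func<string, string> readOption, string generatorTypeName)
    {
        return IsTrue(readOption("SourceGenerator_EnableDebug"))
            || IsTrue(readOption("SourceGenerator_EnableDebug_" + generatorTypeName));
    }

    public static void LaunchIfRequested(Func<string, string> readOption, string generatorTypeName)
    {
        // Only prompt for a debugger when requested and none is attached yet.
        if (ShouldLaunch(readOption, generatorTypeName) && !Debugger.IsAttached)
        {
            Debugger.Launch();
        }
    }

    // Missing (null), empty or non-boolean values count as "off".
    private static bool IsTrue(string value) => bool.TryParse(value, out var result) && result;
}
```

A typical call at the start of Execute would be GeneratorDebugging.LaunchIfRequested(read, nameof(ActorProxySourceGenerator)), so the decision stays testable while the actual Debugger.Launch call is kept in one place.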

Important note: generator assemblies are loaded when VS starts, so after changing the generator we sometimes have to restart VS to see the effect. This is another reason why writing most of the code against unit tests is so important.

IDE support

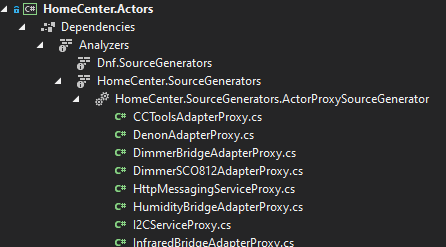

In newer versions of Visual Studio (16.9 and later), IDE support improves with each release. Generated files can be found in Solution Explorer under Dependencies > Analyzers.

Additionally, starting with 16.10 we can debug our SG project by adding the IsRoslynComponent property:

```xml
<PropertyGroup>
  <IsRoslynComponent>true</IsRoslynComponent>
</PropertyGroup>
```

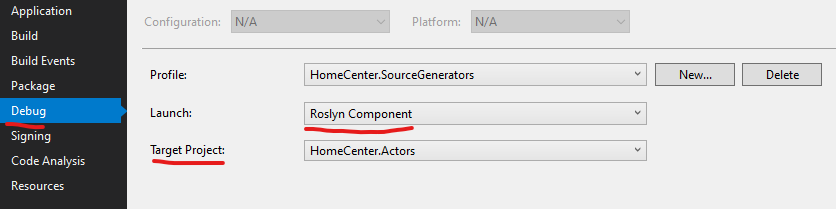

Then we can configure the SG project's debug launch profile to run the SG against a selected target project.

Nuget package

To help with working with Source Generators, I have created NuGet packages containing the code helpers described above:

- https://www.nuget.org/packages/Dnf.SourceGenerators/1.0.0

- https://www.nuget.org/packages/Dnf.SourceGenerators.Testing/1.0.1

When using the package, call ResourceReader.GetResource with a type parameter from the assembly in which the templates are embedded; otherwise it looks in the package's own assembly.

Summary

I hope I have described the whole process of generating code. One thing that is missing is using SG through NuGet packages, which I may describe in a future post. You can also read about it in the links below.

Useful links

- https://github.com/dotnet/roslyn/blob/master/docs/features/source-generators.cookbook.md - Microsoft Examples

- https://github.com/lunet-io/scriban - Scriban docs

- https://github.com/amis92/csharp-source-generators - resources collection

- https://github.com/dotnet/roslyn/projects/54#card-38011524 - SG planning board

- https://www.cazzulino.com/ThisAssembly/ - another real world example

- https://jaylee.org/archive/2020/09/13/msbuild-items-and-properties-in-csharp9-sourcegenerators.html - accessing MsBuild

- https://jaylee.org/archive/2020/10/10/profiling-csharp-9-source-generators.html - Profiling SG

- https://github.com/ignatandrei/RSCG_Examples - Examples